So What is Kubernetes?

As per kubernetes.io:

Kubernetes is a portable, extensible, open source platform for managing containerized workloads and services, that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

Now the question that comes to my mind is: how is it different from Docker?

Docker and Kubernetes are used for containerization and container orchestration, respectively. Although they are often used together, they serve different purposes and have distinct roles in the software development and deployment process.

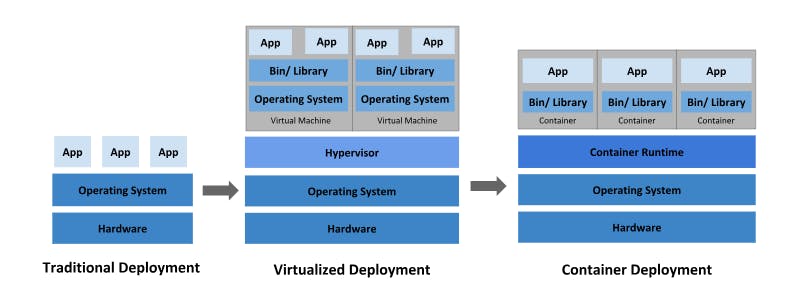

Purpose:

Docker: Docker is primarily a containerization platform. It allows you to package an application and its dependencies into a single unit called a container, which can run consistently across different environments.

Kubernetes: Kubernetes, often abbreviated as K8s, is a container orchestration platform. It is designed to manage the deployment, scaling, and operation of containerized applications across a cluster of machines.

Abstraction Level:

Docker: Docker operates at the container level. It focuses on creating and managing containers.

Kubernetes: Kubernetes operates at a higher level of abstraction. It manages containers but also handles tasks like load balancing, scaling, and self-healing for containerized applications.

Single vs. Multi-Container:

Docker: Docker is well-suited for single-container applications or microservices where you only need to manage a few containers on a single host.

Kubernetes: Kubernetes is designed for complex, multi-container applications and can manage thousands of containers across a cluster of machines.
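To make the difference concrete, here is a minimal sketch of running the same image both ways. The image is the GKE sample image that appears later in this post. The helper function names, the container name and the replica count of 3 are illustrative assumptions. The functions only wrap the standard `docker run` and `kubectl create deployment` commands.

```shell
#!/bin/sh
# Sketch only: helper names, container name and replica count are
# illustrative; the image is the GKE sample image used later in this post.
IMAGE="us-docker.pkg.dev/google-samples/containers/gke/hello-app:1.0"

# Docker: start a single container on this host and publish port 8080.
run_with_docker() {
  docker run -d --name hello-server -p 8080:8080 "$IMAGE"
}

# Kubernetes: declare three replicas; the scheduler chooses the nodes, and
# the Deployment creates a replacement if a Pod is deleted or its node fails.
run_with_kubernetes() {
  kubectl create deployment hello-server --image="$IMAGE" --replicas=3
}
```

With Docker you decide where the container runs. With Kubernetes you state how many copies you want and the cluster decides where they go.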

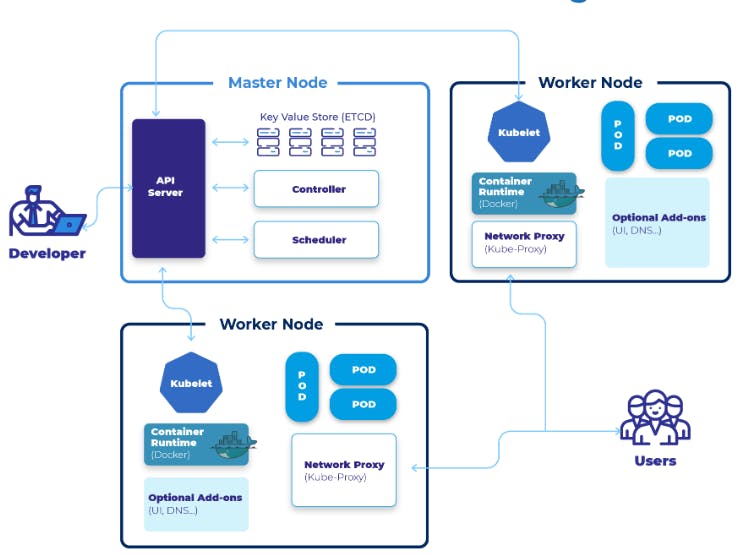

Kubernetes Architecture

When you deploy Kubernetes, you get a cluster.

A Kubernetes cluster consists of a set of worker machines, called nodes, that run containerized applications. Every cluster has at least one worker node.

The worker node(s) host the Pods that are the components of the application workload. The control plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault-tolerance and high availability.

What are nodes?

Kubernetes runs your workload by placing containers into Pods to run on Nodes. A node may be a virtual or physical machine, depending on the cluster. Each node is managed by the control plane and contains the services necessary to run Pods.

Typically you have several nodes in a cluster; in a learning or resource-limited environment, you might have only one node.

The components on a node include the kubelet, a container runtime, and the kube-proxy.
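On a running cluster you can see some of these components from `kubectl`. This is a minimal sketch, assuming `kubectl` is already configured for a cluster. Each node reports its kubelet and container runtime versions under `.status.nodeInfo`. On many clusters, kube-proxy runs as Pods in the `kube-system` namespace. The function name is hypothetical. Clusters that replace kube-proxy with another dataplane (GKE Dataplane V2, for example) may show no kube-proxy Pods.

```shell
#!/bin/sh
# Sketch only: assumes kubectl is configured; the function name is hypothetical.
show_node_components() {
  # Each node reports its kubelet and container runtime versions.
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,KUBELET:.status.nodeInfo.kubeletVersion,RUNTIME:.status.nodeInfo.containerRuntimeVersion'
  # On many clusters kube-proxy runs as Pods in kube-system; clusters that
  # use another dataplane (for example GKE Dataplane V2) may have none.
  kubectl get pods -n kube-system -o wide | grep kube-proxy ||
    echo "no kube-proxy Pods found"
}
```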

What is a Pod?

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

A Pod (as in a pod of whales or pea pod) is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A Pod's contents are always co-located and co-scheduled, and run in a shared context. A Pod models an application-specific "logical host": it contains one or more application containers which are relatively tightly coupled. In non-cloud contexts, applications executed on the same physical or virtual machine are analogous to cloud applications executed on the same logical host.

As well as application containers, a Pod can contain init containers that run during Pod startup. You can also inject ephemeral containers for debugging if your cluster offers this.
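Here is a minimal sketch of a Pod that shows these ideas together. An init container writes a file into a shared `emptyDir` volume, and the application container reads it. The application container starts only after the init container has run to completion. The Pod name, container names and the `busybox:1.36` image are illustrative assumptions. The shell function just prints the manifest so it can be piped to `kubectl apply`.

```shell
#!/bin/sh
# Prints a Pod manifest; names and images are illustrative assumptions.
pod_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
spec:
  volumes:
    - name: shared-data          # storage shared by every container in the Pod
      emptyDir: {}
  initContainers:
    - name: init-writer          # runs to completion before "app" starts
      image: busybox:1.36
      command: ["sh", "-c", "echo 'written by init container' > /data/message"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
  containers:
    - name: app                  # reads the file the init container wrote
      image: busybox:1.36
      command: ["sh", "-c", "cat /data/message && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF
}
```

To use it, run `pod_manifest | kubectl apply -f -`. After that, `kubectl logs demo-pod -c app` should show the message from the init container. If your cluster supports ephemeral containers, `kubectl debug -it demo-pod --image=busybox:1.36 --target=app` attaches one for debugging.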

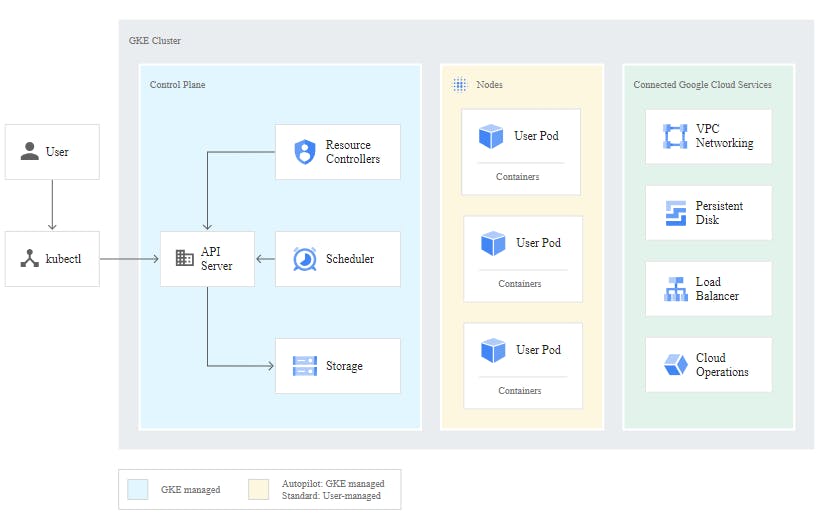

GKE Architecture

To use GKE:

Enable the Artifact Registry and Google Kubernetes Engine APIs.
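From the command line, the same step can be done with `gcloud`. This is a minimal sketch, assuming the Google Cloud CLI is installed and authenticated. The helper name is hypothetical, and the project ID you pass is your own.

```shell
#!/bin/sh
# Sketch only: the helper name is hypothetical; pass your own project ID.
enable_gke_apis() {
  project_id="${1:?usage: enable_gke_apis PROJECT_ID}"
  # Make later gcloud commands default to this project.
  gcloud config set project "$project_id"
  # Enable the Artifact Registry and Google Kubernetes Engine APIs.
  gcloud services enable artifactregistry.googleapis.com container.googleapis.com
}
```

Call it as `enable_gke_apis PROJECT_ID`, replacing `PROJECT_ID` with your project.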

Go to Kubernetes Engine in the Google Cloud console and create a cluster.

A cluster consists of at least one cluster control plane machine (the master node) and multiple worker machines called nodes (worker nodes). Nodes are Compute Engine virtual machine (VM) instances that run the Kubernetes processes necessary to make them part of the cluster. You deploy applications to clusters, and the applications run on the nodes.

To create an Autopilot cluster named `hello-cluster` from the command line, run:

    gcloud container clusters create-auto hello-cluster \
        --location=us-central1

Connect to the created cluster:

    gcloud container clusters get-credentials hello-cluster \
        --location us-central1

This command configures `kubectl` to use the cluster you created.

Deploy a microservice to the cluster using `kubectl` commands:

    kubectl create deployment hello-server \
        --image=us-docker.pkg.dev/google-samples/containers/gke/hello-app:1.0

This Kubernetes command, `kubectl create deployment`, creates a Deployment named `hello-server`. The Deployment's Pod runs the `hello-app` container image.
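Kubernetes also supports the declarative style mentioned in the definition at the top of this post. Below is a minimal sketch of a Deployment manifest roughly equivalent to the command above. The `app: hello-server` label and the `hello-app` container name mirror what `kubectl create deployment` generates. The `containerPort` entry is an addition for readability. The shell function wrapping the YAML is only a convenience for this post.

```shell
#!/bin/sh
# Prints a Deployment manifest roughly equivalent to
# `kubectl create deployment hello-server --image=...hello-app:1.0`.
hello_server_deployment() {
  cat <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
  labels:
    app: hello-server
spec:
  replicas: 1                    # the imperative command's default
  selector:
    matchLabels:
      app: hello-server          # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
        - name: hello-app
          image: us-docker.pkg.dev/google-samples/containers/gke/hello-app:1.0
          ports:
            - containerPort: 8080   # added for readability; the app listens here
EOF
}
```

`hello_server_deployment | kubectl apply -f -` applies it. To change the Deployment declaratively, you would edit the manifest and run `kubectl apply` again.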

Expose the Deployment or microservice to the outside world

After deploying the application, you need to expose it to the internet so that users can access it. You can expose your application by creating a Service, a Kubernetes resource that exposes your application to external traffic.

    kubectl expose deployment hello-server \
        --type LoadBalancer \
        --port 80 \
        --target-port 8080

Passing in the `--type LoadBalancer` flag creates a Compute Engine load balancer for your container. The `--port` flag initializes public port 80 to the internet, and the `--target-port` flag routes the traffic to port 8080 of the application.

Next, inspect the Service:

    kubectl get service hello-server

From this command's output, copy the Service's external IP address from the `EXTERNAL-IP` column. Then view the application in your browser at `http://EXTERNAL_IP`, replacing `EXTERNAL_IP` with that address.
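Right after `kubectl expose`, the `EXTERNAL-IP` column usually shows `<pending>` while GKE provisions the load balancer. This is a minimal sketch that polls the Service with a JSONPath query until an address appears. The function name, the 5-second interval and the 60-attempt limit (about five minutes) are assumptions.

```shell
#!/bin/sh
# Sketch only: function name, interval and attempt limit are assumptions.
wait_for_external_ip() {
  service="${1:-hello-server}"
  attempts=0
  while [ "$attempts" -lt 60 ]; do
    # Empty until the load balancer has been assigned an address.
    ip="$(kubectl get service "$service" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)"
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    attempts=$((attempts + 1))
    sleep 5
  done
  echo "timed out waiting for an external IP on $service" >&2
  return 1
}
```

Usage: `EXTERNAL_IP="$(wait_for_external_ip hello-server)" && curl "http://$EXTERNAL_IP"`.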

Scaling Deployments and Resizing Pods

When you deploy an application in GKE, you define how many replicas of the application you'd like to run. When you scale an application, you increase or decrease the number of replicas.

Each replica of your application represents a Kubernetes Pod that encapsulates your application's container(s).
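`kubectl scale` returns as soon as the new replica count is recorded, while the extra Pods may still be starting. Here is a minimal sketch of a helper that scales a Deployment and then waits for the new replicas to become available. The function name and the 120-second timeout are illustrative assumptions. `kubectl scale` and `kubectl rollout status` are standard kubectl subcommands.

```shell
#!/bin/sh
# Sketch only: the helper name and timeout are illustrative.
scale_and_wait() {
  deployment="${1:?usage: scale_and_wait DEPLOYMENT REPLICAS}"
  replicas="${2:?usage: scale_and_wait DEPLOYMENT REPLICAS}"
  # Record the new desired replica count, then block until the Deployment
  # reports all of its updated replicas as available (or the timeout hits).
  kubectl scale deployment "$deployment" --replicas="$replicas" &&
    kubectl rollout status deployment/"$deployment" --timeout=120s
}
```

For example, `scale_and_wait my-app 4`.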

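List the Deployments, scale `my-app` (a placeholder for your Deployment's name) to four replicas, and then confirm the new count:

    kubectl get deployments
    kubectl scale deployments my-app --replicas 4
    kubectl get deployments my-app

Auto Scaling of microservices or Pods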
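Before turning on autoscaling, it helps to see how the HorizontalPodAutoscaler picks a replica count. The Kubernetes documentation gives the core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below implements just that rule in shell integer arithmetic and clamps the result to a minimum and maximum. The function name and its arguments are hypothetical. The real controller also applies a tolerance (10% by default), stabilization windows and special handling for Pods that are not ready.

```shell
#!/bin/sh
# Sketch of the HorizontalPodAutoscaler's core rule only:
#   desired = ceil(current_replicas * current_cpu / target_cpu)
# clamped to [min_replicas, max_replicas]. Tolerance, stabilization windows
# and readiness handling are not modelled.
hpa_desired_replicas() {
  current_replicas="$1"  # replicas running now
  current_cpu="$2"       # observed average CPU, % of requested CPU
  target_cpu="$3"        # target utilization, e.g. the value of --cpu-percent
  min_replicas="$4"
  max_replicas="$5"

  # Integer ceiling division: ceil(a / b) = (a + b - 1) / b
  # for non-negative a and positive b.
  product=$((current_replicas * current_cpu))
  desired=$(( (product + target_cpu - 1) / target_cpu ))

  # Keep the result within the autoscaler's bounds.
  if [ "$desired" -lt "$min_replicas" ]; then
    desired="$min_replicas"
  fi
  if [ "$desired" -gt "$max_replicas" ]; then
    desired="$max_replicas"
  fi
  echo "$desired"
}
```

For example, `hpa_desired_replicas 2 90 70 1 4` prints `3`. Two replicas averaging 90% CPU against a 70% target need ceil(2 × 90 / 70) = ceil(2.57) = 3 replicas. At 140% the same formula asks for 8, but the maximum of 4 caps it.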

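To let Kubernetes adjust the number of Pods for you, create a HorizontalPodAutoscaler. This command keeps `my-app` at no more than four replicas and targets an average CPU utilization of 70% of the Pods' requested CPU:

    kubectl autoscale deployment my-app --max=4 --cpu-percent=70

To autoscale the nodes as well, enable the cluster autoscaler when you create a Standard cluster. Clusters created with `create-auto`, such as `hello-cluster`, are Autopilot clusters and scale their nodes automatically.

    gcloud container clusters create CLUSTER_NAME \
        --enable-autoscaling \
        --num-nodes NUM_NODES \
        --min-nodes MIN_NODES \
        --max-nodes MAX_NODES \
        --region=COMPUTE_REGION

Clean Up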

Delete the Service first, which releases its load balancer, then the Deployment, and finally the cluster:

    kubectl delete services my-service
    kubectl delete deployments my-deployment
    gcloud container clusters delete CLUSTER_NAME
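For the resources created in this walkthrough, the same cleanup uses the names from the earlier steps. This is a minimal sketch. The function name is hypothetical, and `--quiet` is gcloud's global flag for skipping the confirmation prompt.

```shell
#!/bin/sh
# Sketch only: uses this walkthrough's names; the function name is hypothetical.
cleanup_hello_tutorial() {
  # Delete the Service first so GKE releases its load balancer.
  kubectl delete service hello-server
  kubectl delete deployment hello-server
  # --quiet skips the interactive confirmation prompt.
  gcloud container clusters delete hello-cluster --location us-central1 --quiet
}
```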